To increase crawlability, indexability, and performance, your single-page app must:

- Use server-side rendering or prerendering to provide search crawlers with fully rendered HTML content.

- Be optimized as much as possible for performance, especially on mobile, to decrease perceived latency.

- Have a clear, clean structure of SEO-friendly URLs.

- Be tested regularly with SEO and performance monitoring tools.

SPA developers must use various workarounds to overcome these SEO issues, for example:

- Prerender.io

- isomorphic JavaScript

- Headless Chrome

- Preact

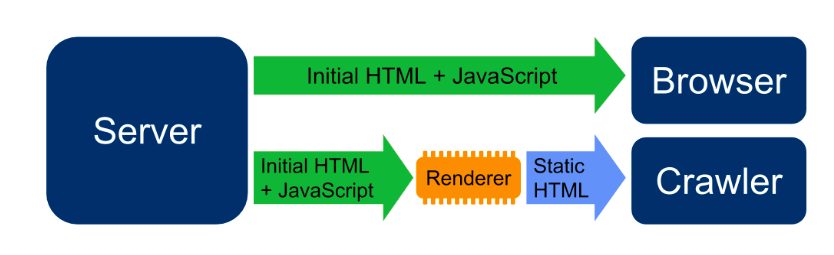

Prerender.io, used together with Angular, serves fully rendered pages to the crawler.

Isomorphic JavaScript, sometimes called “Universal JavaScript”, lets a page be generated on the server and sent to the browser, which can immediately render and display it. This solves the SEO issues because Google doesn’t have to execute and render the JavaScript in the indexer.
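To make the idea concrete, here is a minimal sketch, assuming a plain string-templating renderer rather than a real framework such as React or Preact. The point is that one pure render function produces the markup on the server, and the same function can run in the browser later. The names renderArticle, renderPage, __INITIAL_STATE__, and /bundle.js are hypothetical.

```typescript
// Hypothetical data shape for a page's content.
interface Article {
  title: string;
  body: string;
}

// Escape text before interpolating it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Isomorphic render function: pure state -> markup, no DOM access,
// so it can run unchanged on the server (Node) or in the browser.
function renderArticle(article: Article): string {
  return `<article><h1>${escapeHtml(article.title)}</h1><p>${escapeHtml(article.body)}</p></article>`;
}

// Server side: wrap the rendered markup in a full HTML document so crawlers
// receive real content, and embed the state so the client can pick up from there.
function renderPage(article: Article): string {
  // Escape "<" so the serialized state cannot close the <script> tag early.
  const state = JSON.stringify(article).replace(/</g, "\\u003c");
  return [
    "<!doctype html>",
    `<html><head><title>${escapeHtml(article.title)}</title></head>`,
    `<body><div id="app">${renderArticle(article)}</div>`,
    `<script>window.__INITIAL_STATE__ = ${state};</script>`,
    '<script src="/bundle.js"></script></body></html>',
  ].join("\n");
}
```

In the browser, the hypothetical bundle would read the embedded state and call the same renderArticle to take over #app, which is what frameworks do in a more refined way when they hydrate server-rendered markup.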

Headless Chrome is another option, recently proposed as an easy solution by a Google engineer, who also mentions Preact, a lightweight library that supports server-side rendering.

It’s also a good idea to create a properly formatted XML Sitemap and submit that to Google Search Console.
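A minimal sketch of generating such a sitemap, following the sitemaps.org 0.9 protocol (a urlset element containing url entries, each with a loc and an optional lastmod). The buildSitemap helper and the example.com URLs are hypothetical.

```typescript
// One entry per canonical, crawlable URL of the SPA.
interface SitemapEntry {
  loc: string;      // absolute URL, e.g. "https://example.com/products/42"
  lastmod?: string; // W3C date or datetime, e.g. "2024-01-15"
}

// The sitemap protocol requires XML entity escaping of these characters.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemap(entries: SitemapEntry[]): string {
  const urls = entries.map((entry) => {
    const lastmod = entry.lastmod ? `<lastmod>${escapeXml(entry.lastmod)}</lastmod>` : "";
    return `  <url><loc>${escapeXml(entry.loc)}</loc>${lastmod}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}
```

The output would typically be served as /sitemap.xml, and that URL is what you submit in Google Search Console.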

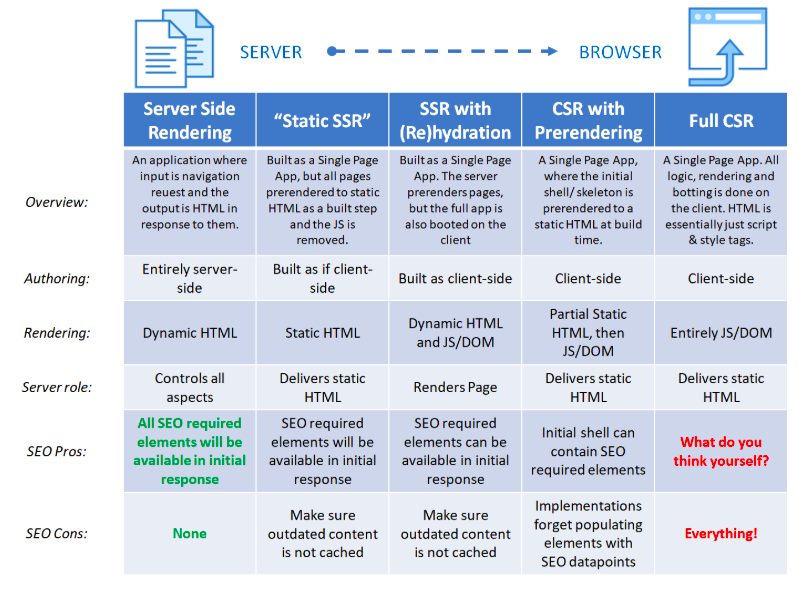

Prerendering vs. Server-Side Rendering

Prerendering is a trade-off between client-side and server-side rendering. Every prerendered page displays a skeleton template while the data waits to be rehydrated with AJAX/XHR requests. Once the page is fetched, internal routing is done dynamically to take advantage of a client-side rendered website.

Prerendering discriminates between real users and search crawlers. Your SPA is run in a headless browser, which generates a “Googlebot-ready”, fully rendered HTML version of your app. That version is stored on your web server. When a request hits the server, it checks whether the request comes from a real user (A) or from Googlebot (B):

- A: serve your SPA straight up.

- B: serve the prerendered static files.

For React, the tutorial “Prerendering with React” shows how to handle prerendering with Create React App and react-snapshot.

If your content is static, prerendering should be enough for you. But if it depends on other APIs, the content could change at runtime, and you would have to do true SSR to accommodate that. SSR is more resource-intensive on the server, though. As for the pages behind a login, they probably shouldn’t be crawled by bots anyway, so it is okay to use CSR for all logged-in pages.

CSR alone doesn’t mean you will get a significantly faster initial load, though. There are many factors to consider, such as the HTML document size, network round-trip latency, and the response time of the other services your own service depends on. BUT, combined with a service worker and the app-shell model, CSR should almost always be faster than SSR. I would recommend looking into those to improve CSR speed.

Prerendering can be too slow and costly. In my experience, the simple lesson learned is that these solutions have their limits, and if getting SEO right is a critical component of the big picture, SSR is likely the ideal choice.

Common Problems With SPAs and Recommended Solutions

| Problem with SPAs | Recommended solution |
|---|---|
| Slow first-time load | |
| Only one URL for the whole website | Today, the general SEO-friendly way of dealing with SPA URLs is to leverage the History API and the pushState() method in the browser. It lets you fetch async resources AND update “clean” URLs without fragment identifiers. The MDN Web Docs has a solid entry on the subject. Popular open-source routing libraries for React, Vue, and Angular all rely on pushState() under the hood for history-based routing. Clean URLs also facilitate link-building efforts and the organic acquisition of backlinks. Last but not least, a clean URL architecture makes analyzing data in Google Analytics MUCH easier. |
| Perceived latency | Render-blocking JS files that Googlebot hits can harm your SPA’s SEO, especially on mobile (remember, mobile-first indexing!). A slow, incomplete mobile experience will harm your SEO, whether you’re building a single-page application or any other type of website. With SPAs, though, a big ol’ JS file is often placed at the top of your HTML, which creates a potential bottleneck for page rendering. Your goal: make your code load as fast as possible along the critical rendering path. A geographically distributed network of servers (a CDN) allows faster file delivery, wherever the request comes from. Smaller asset sizes are always good, whether they’re loaded asynchronously or not. And ask yourself: do ALL resources need to be loaded with the initial page load? |
| Google doesn’t see or capture your most recent content | Make sure the SSR engine always provides an up-to-date version of each URL; otherwise, Google won’t see and capture your most recent articles, and you’ll miss out on valuable traffic. |
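The History API approach for clean URLs can be sketched with a tiny hand-rolled router, assuming you are not using React Router, Vue Router, or Angular’s router. HistoryLike, matchRoute, and CleanUrlRouter are hypothetical names; in a browser you would pass window.history, whose pushState(state, unused, url) method is the real API.

```typescript
// The part of the browser History API this sketch needs; window.history satisfies it.
interface HistoryLike {
  pushState(data: unknown, unused: string, url?: string | null): void;
}

type RouteParams = Record<string, string>;
type RouteHandler = (params: RouteParams) => void;

// Matches a clean URL such as "/products/42" against a pattern such as "/products/:id".
function matchRoute(pattern: string, path: string): RouteParams | null {
  const patternParts = pattern.split("/").filter(Boolean);
  const pathParts = path.split("/").filter(Boolean);
  if (patternParts.length !== pathParts.length) return null;

  const params: RouteParams = {};
  for (let i = 0; i < patternParts.length; i++) {
    const expected = patternParts[i];
    const actual = pathParts[i];
    if (expected.startsWith(":")) {
      params[expected.slice(1)] = decodeURIComponent(actual);
    } else if (expected !== actual) {
      return null;
    }
  }
  return params;
}

class CleanUrlRouter {
  private readonly history: HistoryLike;
  private readonly routes: Array<{ pattern: string; handler: RouteHandler }> = [];

  constructor(history: HistoryLike) {
    this.history = history;
  }

  on(pattern: string, handler: RouteHandler): this {
    this.routes.push({ pattern, handler });
    return this;
  }

  // Updates the address bar with a clean URL (no "#" fragment) and renders the matching view.
  navigate(path: string): boolean {
    for (const route of this.routes) {
      const params = matchRoute(route.pattern, path);
      if (params) {
        this.history.pushState({ path }, "", path);
        route.handler(params);
        return true;
      }
    }
    return false;
  }
}
```

A real router also listens for the popstate event so the Back and Forward buttons re-render the right view. The server must also answer every clean URL with the app shell (or an SSR or prerendered page), because a crawler or a page refresh requests that URL directly.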
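One way to keep SSR output fresh for crawlers, assuming your SSR layer caches rendered HTML, is to bound how old a cached page may be and to purge an entry whenever its content changes. FreshRenderCache, maxAgeMs, and the injectable clock are hypothetical names for illustration, not part of any SSR framework.

```typescript
// Renders the HTML for a URL; in a real app this would call your SSR engine.
type Renderer = (url: string) => string;

// In-memory cache that never serves HTML older than maxAgeMs and can be purged on publish.
class FreshRenderCache {
  private readonly entries = new Map<string, { html: string; renderedAt: number }>();
  private readonly render: Renderer;
  private readonly maxAgeMs: number;
  private readonly now: () => number;

  constructor(render: Renderer, maxAgeMs: number, now: () => number = Date.now) {
    this.render = render;
    this.maxAgeMs = maxAgeMs;
    this.now = now;
  }

  get(url: string): string {
    const time = this.now();
    const cached = this.entries.get(url);
    if (cached && time - cached.renderedAt < this.maxAgeMs) {
      return cached.html; // still fresh enough to serve
    }
    const html = this.render(url); // stale or missing: re-render
    this.entries.set(url, { html, renderedAt: time });
    return html;
  }

  // Call when an article is published or edited so the next request re-renders it.
  invalidate(url: string): void {
    this.entries.delete(url);
  }
}
```

Purging on publish makes new articles visible immediately, while the maximum age acts as a safety net for content that changes without an explicit purge, such as data pulled from other APIs.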

Conclusion

Right now, there doesn’t appear to be any single solution or paint-by-numbers approach to handling the problems you may encounter with SPA SEO. Prerendering can be too slow and costly. Considering all the factors mentioned above, SSR seems to be the best option available.